Introduction¶

NaxRiscv¶

NaxRiscv is a core currently characterised by :

Out of order execution with register renaming

Superscalar (ex : 2 decode, 3 execution units, 2 retire)

(RV32/RV64)IMAFDCSU (Linux / Buildroot / Debian)

Portable HDL, but target FPGA with distributed ram (Xilinx series 7 is the reference used so far)

Target a (relatively) low area usage and high fmax (not the best IPC)

Decentralized hardware elaboration (Empty toplevel parametrized with plugins)

Frontend implemented around a pipelining framework to ease customisation

Non-blocking Data cache with multiple refill and writeback slots

BTB + GSHARE + RAS branch predictors

Hardware refilled MMU (SV32, SV39)

Load to use latency of 3 cycles via the speculative cache hit predictor

Pipeline visualisation via verilator simulation and Konata (gem5 file format)

JTAG / OpenOCD / GDB support by implementing the RISCV External Debug Support v. 0.13.2

Support memory coherency via Tilelink

Optional coherent L2 cache

Project development and status¶

This project is free and open source

It can run upstream Debian/Buildroot/Linux on hardware (ArtyA7 / Litex)

It started in October 2021, got some funding from NLnet later on (https://nlnet.nl/project/NaxRiscv/#ack)

An third party documentation is also available here (from CEA-Leti): https://github.com/SpinalHDL/naxriscv_doc

Why a OoO core targeting FPGA¶

There is a few reasons

Improving single threaded performance. During the tests made with VexRiscv running linux, it was clear that even if the can multi core help, “most” applications aren’t made to take advantage of it.

Hiding the memory latency (There isn’t much memory to have a big L2 cache on FPGA)

To experiment with more advanced hardware description paradigms (scala / SpinalHDL)

By personal interest

Also there wasn’t many OoO opensource softcore out there in the wild (Marocchino, RSD, OPA, ..). The bet was that it was possible to do better in some metrics, and hopefully being good enough to justify in some project the replacement of single issue / in order core softcore by providing better performances (at the cost of area).

Additional resources¶

RISC-V Week Paris 2022 :

Slides : https://github.com/SpinalHDL/SpinalDoc/blob/master/presentation/en/nax_040522/slides_nax_040522.pdf

Video : https://peertube.f-si.org/videos/watch/b2c577b1-977a-48b9-b395-244ebf3d1ef6

37C3 2023 :

Pipeline¶

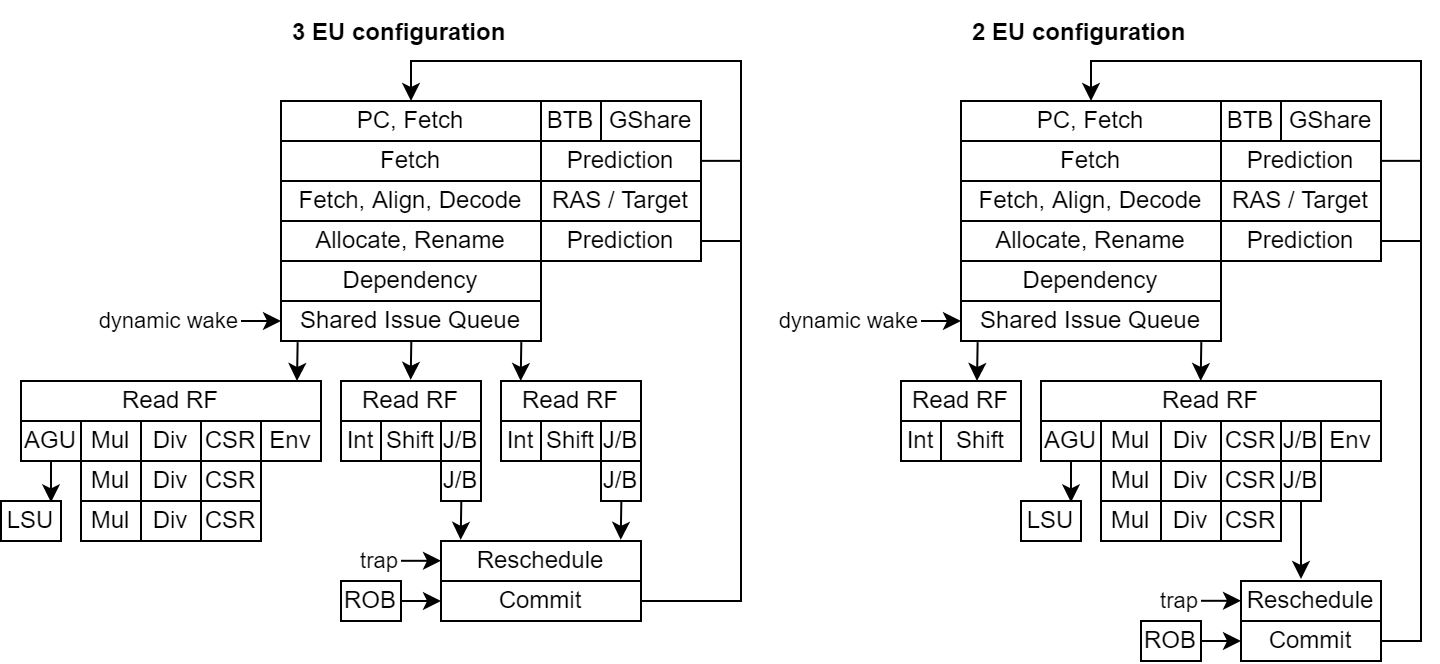

NaxRiscv is composed of multiple pipelines :

Fetch : Which provide the raw data for a given PC, provide a first level of branch prediction and do the PC memory translation

Frontend : Which align, decode the fetch raw data, provide a second level of branch prediction, then allocates and rename the resources required for the instruction

Execution units : Which get the resources required for a given instruction (ex RF, PC, ..), execute it, write its result back and notify completion.

LSU load : Which read the data cache, translate the memory address, check for older store dependencies and eventually bypass a store value.

LSU store : Which translate the memory address and check for younger load having notified completion while having a dependency with the given store

LSU writeback : Which will write committed store into the data cache

But it should also be noticed that there is a few state machines :

LSU Atomic : Which sequentially execute SC/AMO operations once they are the next in line for commit.

MMU refill : Which walk the page tables to refill the TLB

Privileged : Which will check and update the CSR required for trap and privilege switches (mret, sret)

Here is a general architectural diagram :

How to use¶

There are currently two ways to try NaxRiscv :

Simulation : Via verilator (https://github.com/SpinalHDL/NaxRiscv/blob/main/src/test/cpp/naxriscv/README.md)

Hardware : Via the Litex integration (https://github.com/SpinalHDL/NaxRiscv#running-on-hardware)

Hardware description¶

NaxRiscv was designed using SpinalHDL (a Scala hardware description library). One goal of the implementation was to explore new hardware elaboration paradigms as :

Automatic pipelining framework

Distributed hardware elaboration

Software paradigms applied to hardware elaboration (ex : software interface)

A few notes about it :

You can get generate the Verilog or the VHDL from it.

A whole chapter (Abstractions / HDL) of the doc is dedicated to explore the different paradigms used

Acknowledgment¶

Thanks to nlnet for the funding (see funding chapter)

Thanks to Litex for the SoC infrastructure

Thanks to the CEA for the additional documentation

Funding¶

This project is funded through the NGI Zero Entrust Fund, a fund established by NLnet with financial support from the European Commission’s Next Generation Internet program. Learn more on the NLnet project page.