Introduction

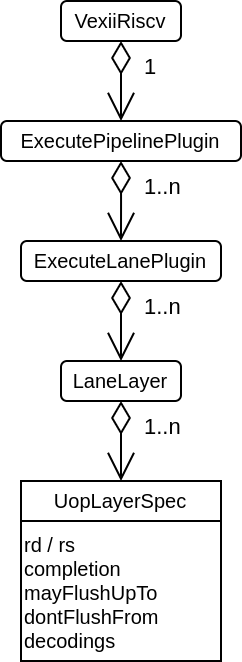

The execute pipeline has the following properties:

- It supports multiple lanes of execution.
- It supports multiple implementations of the same instruction on the same lane (late-alu) via the concept of "layer".
- Each layer is owned by a given lane.
- Each layer can implement multiple instructions and store a data model of their requirements.
- The pipeline never collapses bubbles: all lanes of every stage move forward together as one.
- Elements of the pipeline are allowed to stop the whole pipeline via a shared freeze interface.
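The last two properties (no bubble collapsing, one shared freeze) can be illustrated with a small behavioural model. This is only a sketch in plain Python, not the project's hardware code: the `LockstepPipeline` class, its `step` method and the uop names are hypothetical, and it assumes a frozen cycle neither moves nor accepts any uop.

```python
from typing import List, Optional


class LockstepPipeline:
    """Toy model: a lanes x stages grid of slots, where None is a bubble."""

    def __init__(self, lanes: int, stages: int) -> None:
        self.slots: List[List[Optional[str]]] = [
            [None] * stages for _ in range(lanes)
        ]

    def step(self, issued: List[Optional[str]],
             freeze: bool = False) -> List[Optional[str]]:
        """Advance one cycle; return what leaves the last stage of each lane."""
        if freeze:
            # Shared freeze: no lane moves and nothing new is accepted.
            return [None] * len(self.slots)
        retired = []
        for lane, new_uop in zip(self.slots, issued):
            retired.append(lane.pop())  # content of the last stage leaves
            lane.insert(0, new_uop)     # bubbles shift along, never collapsed
        return retired


pipe = LockstepPipeline(lanes=2, stages=3)
pipe.step(["add", None])                 # lane 1 receives a bubble
pipe.step([None, "mul"])                 # the bubble behind "add" is kept
pipe.step(["sub", "lw"], freeze=True)    # whole pipeline holds its state
```

Because bubbles are never squeezed out, a uop's position always equals the number of unfrozen cycles since it was issued, which is what makes fixed, elaboration-time stage numbers meaningful.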

Here is a class diagram:

The key point is that every uop implementation in the pipeline carries the following elaboration-time information:

- How/where to retrieve the result of the instruction (rd)
- From which point in the pipeline it uses which register file (rs)
- From which point in the pipeline the instruction can be considered as done (completion)
- Until which point in the pipeline the instruction may flush younger instructions (mayFlushUpTo)
- From which point in the pipeline the instruction should not be flushed anymore because it has already produced side effects (dontFlushFrom)
- The list of decoded signals/values that the instruction uses (decodings)
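As a rough illustration, the per-uop information listed above could be modelled as one record per uop implementation. The sketch below is plain Python, not the project's actual classes: `UopSpec`, its field names, the stage numbers and the example uops are all hypothetical, and treating mayFlushUpTo and dontFlushFrom as inclusive bounds is an assumption.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class UopSpec:
    """Hypothetical elaboration-time record for one uop implementation."""
    name: str
    rd_at: Optional[int]                    # stage where the result is available
    rs_at: Dict[str, int]                   # register-file read -> stage using it
    completion_at: int                      # stage where the uop counts as done
    may_flush_up_to: Optional[int] = None   # last stage where it may flush younger uops
    dont_flush_from: Optional[int] = None   # first stage where it has side effects
    decodings: Dict[str, object] = field(default_factory=dict)

    def may_flush_at(self, stage: int) -> bool:
        # Assumed inclusive: the uop can still flush at may_flush_up_to.
        return self.may_flush_up_to is not None and stage <= self.may_flush_up_to

    def can_be_flushed_at(self, stage: int) -> bool:
        # Assumed inclusive: the uop is protected from dont_flush_from onward.
        return self.dont_flush_from is None or stage < self.dont_flush_from


# Example records with made-up stage numbers.
add = UopSpec("ADD", rd_at=0, rs_at={"RS1": 0, "RS2": 0}, completion_at=0,
              decodings={"ALU_OP": "add"})
branch = UopSpec("BEQ", rd_at=None, rs_at={"RS1": 0, "RS2": 0},
                 completion_at=1, may_flush_up_to=1)
store = UopSpec("SW", rd_at=None, rs_at={"RS1": 0, "RS2": 1},
                completion_at=2, dont_flush_from=2)
```

Keeping this data as plain values, rather than burying it inside the hardware description of each unit, is what lets other parts of the design query it before any logic is generated.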

The idea is that, with all this information, the ExecuteLanePlugin, DispatchPlugin and DecodePlugin are able to generate the logic for a functional pipeline, dispatcher and decoder without any hand-written, hardcoded hardware.
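To make "generating logic from the specification" more concrete, here is one derivation a dispatcher could perform from mayFlushUpTo and dontFlushFrom alone. It is a sketch in plain Python under stated assumptions, not a description of how DispatchPlugin actually works: the function names, the `SPECS` table and its stage numbers are hypothetical, both bounds are taken as inclusive, and the pipeline is assumed to advance in lockstep. If an older uop may flush at any stage up to f, and a younger uop must not be flushed from stage d onward, then a younger uop issued k cycles later is unsafe whenever f - k >= d, so the minimum safe gap is max(0, f - d + 1).

```python
from typing import Dict, Optional, Tuple


def min_dispatch_gap(older_may_flush_up_to: Optional[int],
                     younger_dont_flush_from: Optional[int]) -> int:
    """Fewest cycles the younger uop must trail the older one to stay flushable."""
    if older_may_flush_up_to is None or younger_dont_flush_from is None:
        return 0  # the older never flushes, or the younger is always flushable
    return max(0, older_may_flush_up_to - younger_dont_flush_from + 1)


# Hypothetical per-uop data: name -> (mayFlushUpTo, dontFlushFrom)
SPECS: Dict[str, Tuple[Optional[int], Optional[int]]] = {
    "ADD": (None, None),
    "BRANCH": (2, None),
    "STORE": (None, 2),
    "CSR": (None, 1),
}


def gap_table(specs: Dict[str, Tuple[Optional[int], Optional[int]]]
              ) -> Dict[Tuple[str, str], int]:
    """Required gap for every (older, younger) pair, computed once up front."""
    return {
        (older, younger): min_dispatch_gap(specs[older][0], specs[younger][1])
        for older in specs
        for younger in specs
    }


table = gap_table(SPECS)
```

Adding a new uop then only means adding one entry to the table; the hazard rules for every pairing follow from the data instead of being written by hand.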